Abstract

Recent successes suggest that an image can be manipulated by a text prompt, e.g., a landscape scene on a sunny day is manipulated into the same scene on a rainy day, driven by the text input "raining". These approaches often utilize a StyleCLIP-based image generator, which leverages a multi-modal (text and image) embedding space. However, we observe that such text inputs are often a bottleneck in providing and synthesizing rich semantic cues, e.g., differentiating heavy rain from rain with thunderstorms. To address this issue, we advocate leveraging an additional modality, sound, which has notable advantages in image manipulation because it can convey more diverse semantic cues (vivid emotions or dynamic expressions of the natural world) than text. In this paper, we propose a novel approach that first extends the image-text joint embedding space with sound and then applies a direct latent optimization method to manipulate a given image based on an audio input, e.g., the sound of rain. Our extensive experiments show that our sound-guided image manipulation approach produces semantically and visually more plausible manipulation results than state-of-the-art text-guided and sound-guided image manipulation methods, a finding further confirmed by our human evaluations. Our downstream task evaluations also show that our learned image-text-sound joint embedding space effectively encodes sound inputs.

Method

We propose a novel audio-visual weakly paired contrastive learning method, which prevents the sound representation from learning biases between audio and visual samples drawn from other videos in a minibatch. Our approach increases the number of positive weak edges between sounds and images sampled from other videos. By leveraging weakly connected audio-visual pairs that share the same text description, we take advantage of the fact that videos with the same label share common visual feature information. We propose to minimize the Kullback–Leibler divergence between the visual-text cosine-similarity probability distribution and the audio-visual cosine-similarity probability distribution. Our weakly paired contrastive learning proceeds as follows. First, we sample additional visual data with text queries during the sound representation pre-training step. Then, the audio-visual similarity score is trained to imitate the visual-text similarity score. Instead of estimating the identity matrix directly, the proposed method matches the audio-visual softmax probability distribution to the visual-text softmax probability distribution within each minibatch. We find that our approach generates images that are more robust with respect to fine-grained visual details, such as drastic color and hairstyle changes, than the previous method.
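The objective described above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation. Several choices here are assumptions that the text does not specify: the function names, the temperature value, the direction of the divergence (visual-text as the target, audio-visual as the prediction), and the decision to build one distribution per image over the other modality's samples in the minibatch.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two embedding vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def softmax(scores, temperature):
    """Numerically stable softmax over temperature-scaled scores."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def similarity_distributions(queries, keys, temperature):
    """Row i is the softmax over cosine similarities of queries[i] to all keys."""
    return [
        softmax([cosine_similarity(q, k) for k in keys], temperature)
        for q in queries
    ]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def weakly_paired_loss(image_emb, text_emb, audio_emb, temperature=0.1):
    """Mean KL between visual-text and audio-visual similarity distributions.

    Assumed setup: sample i of the minibatch has an image, a text, and an
    audio embedding. Samples sharing a text description get equal target
    mass, which is what makes them weak positives.
    """
    target = similarity_distributions(image_emb, text_emb, temperature)
    predicted = similarity_distributions(image_emb, audio_emb, temperature)
    per_image = [kl_divergence(t, p) for t, p in zip(target, predicted)]
    return sum(per_image) / len(per_image)
```

In this sketch the target is not an identity matrix. When two samples in the minibatch share a text embedding, the visual-text distribution assigns them equal probability, so the audio encoder is not pushed to treat the second sample as a negative.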

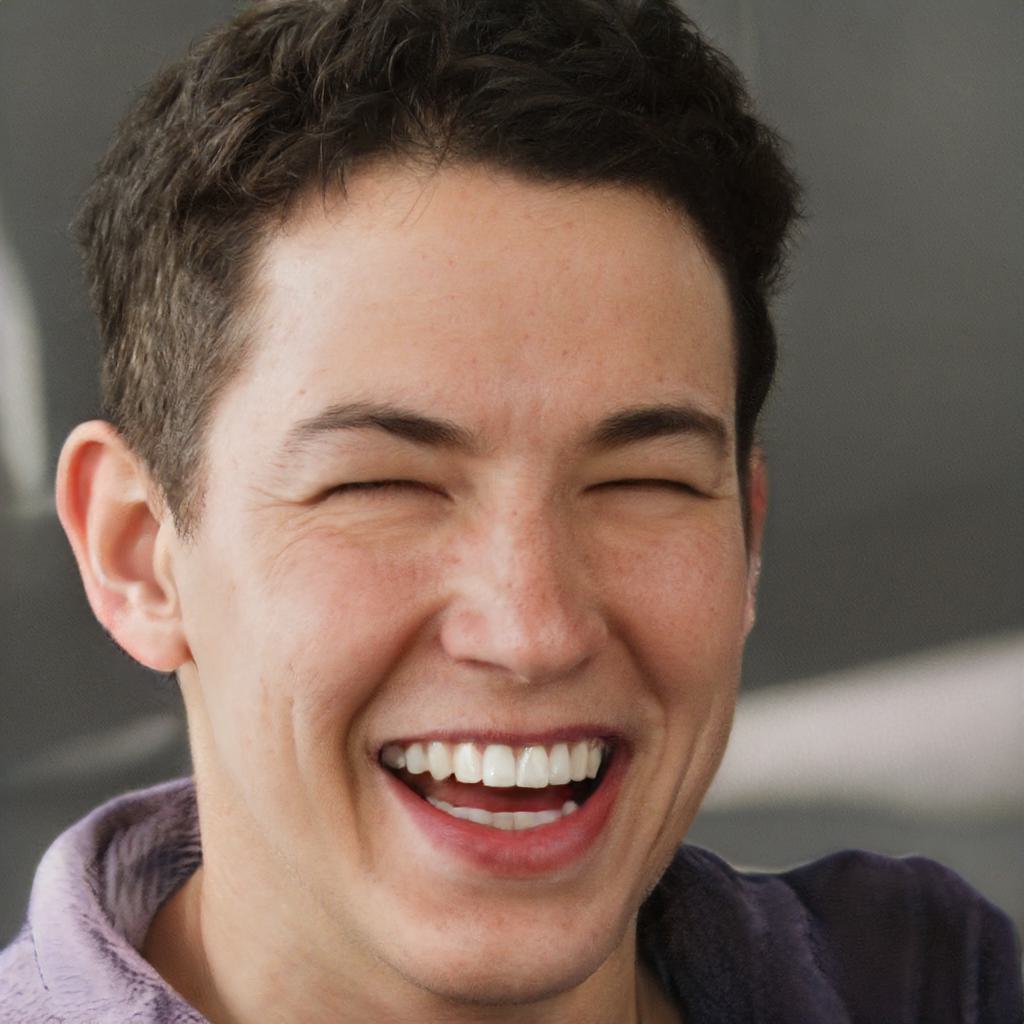

Manipulation Results - Successful Cases

Sound input: 🔊 Giggling

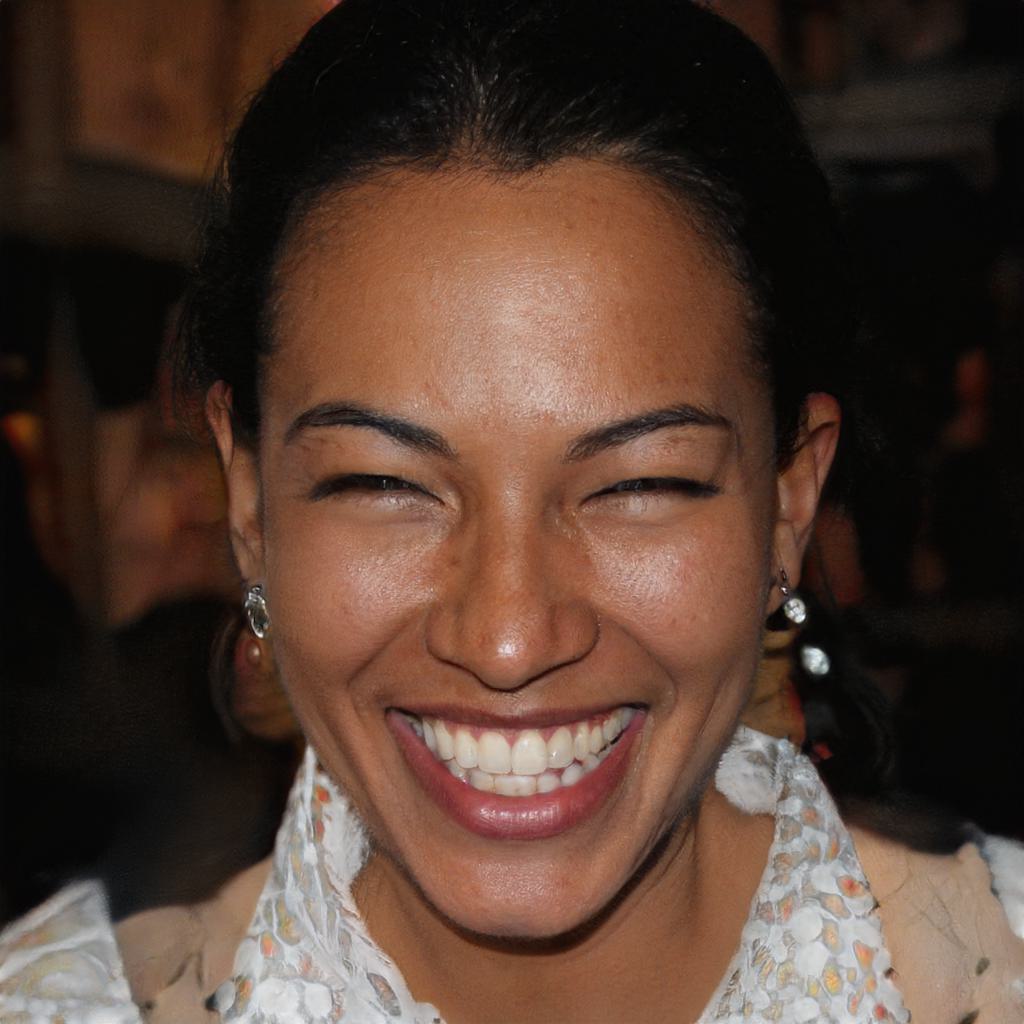

Sound input: 🔊 Giggling

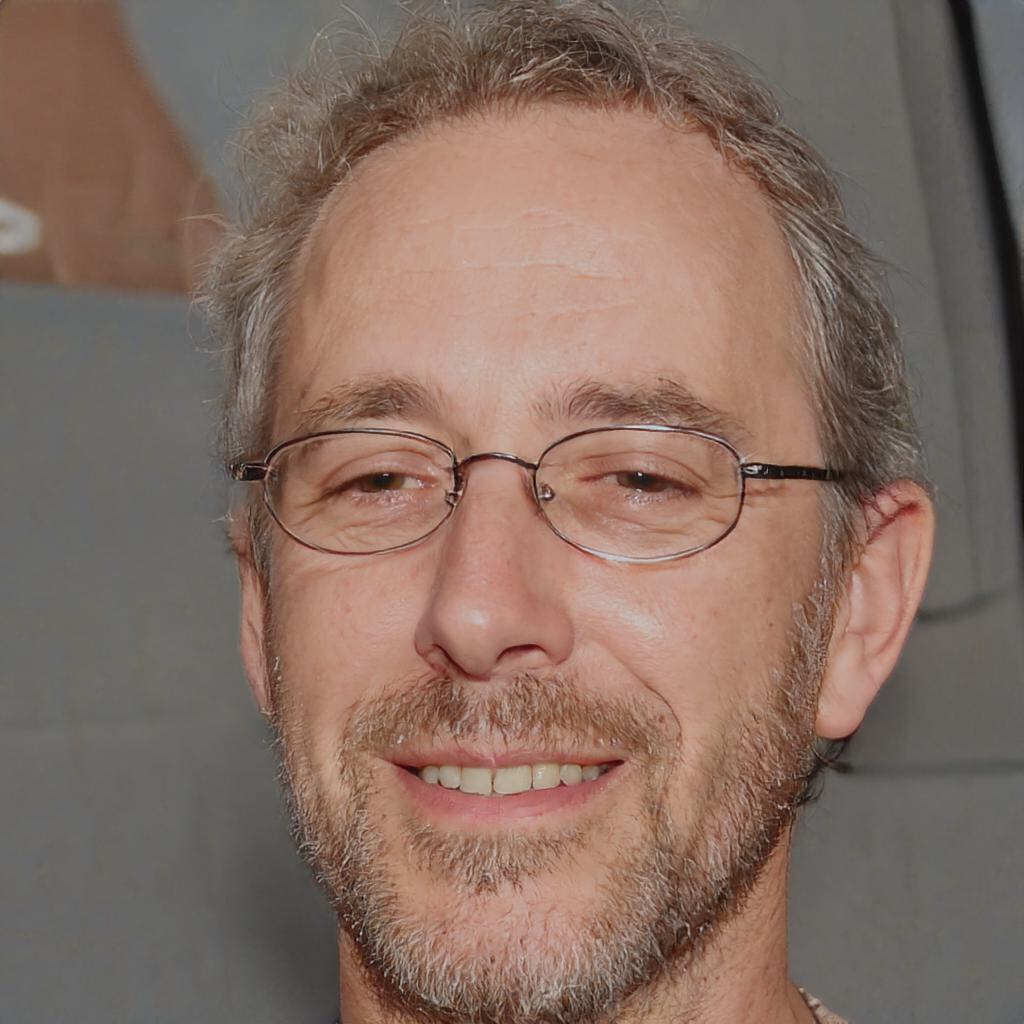

Sound input: 🔊 Rap god

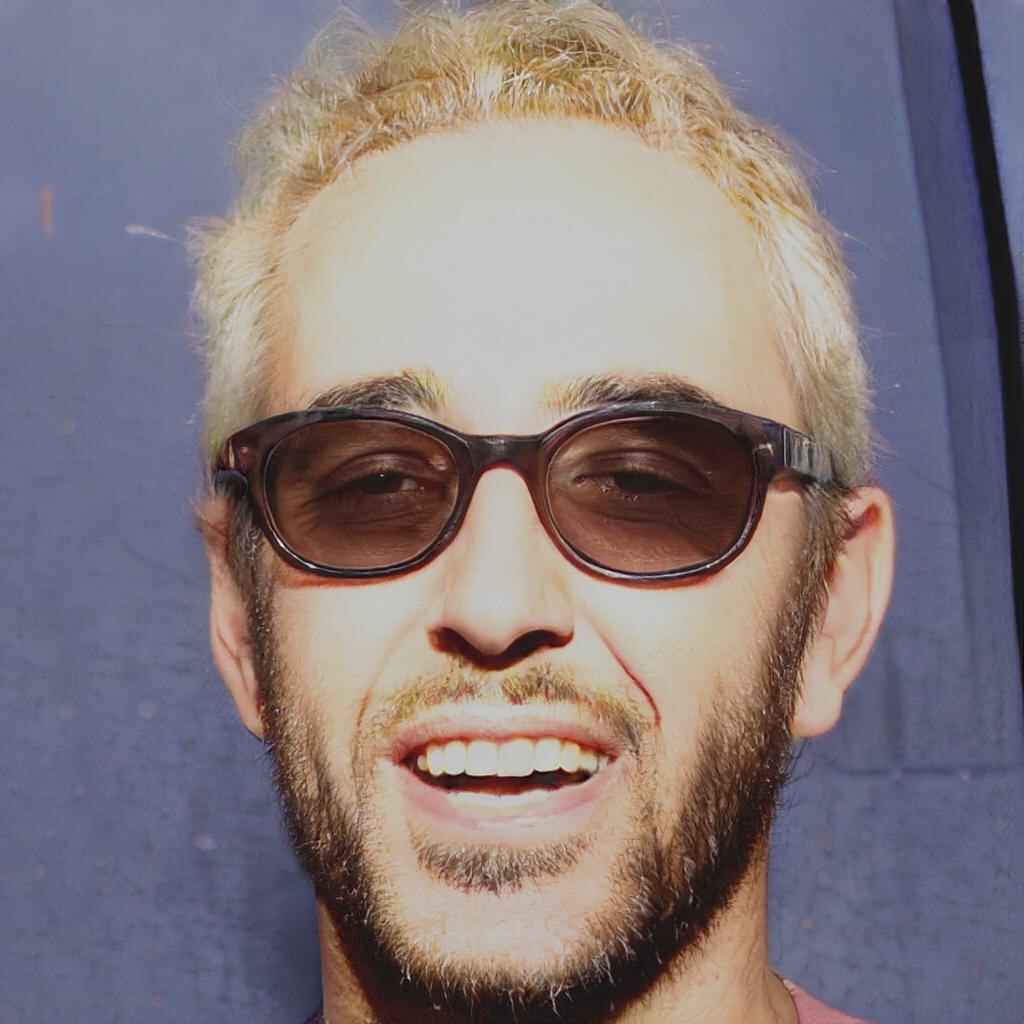

Manipulation Results - Failure Cases

mode oscillation

radical style transition

gender transition